About Me

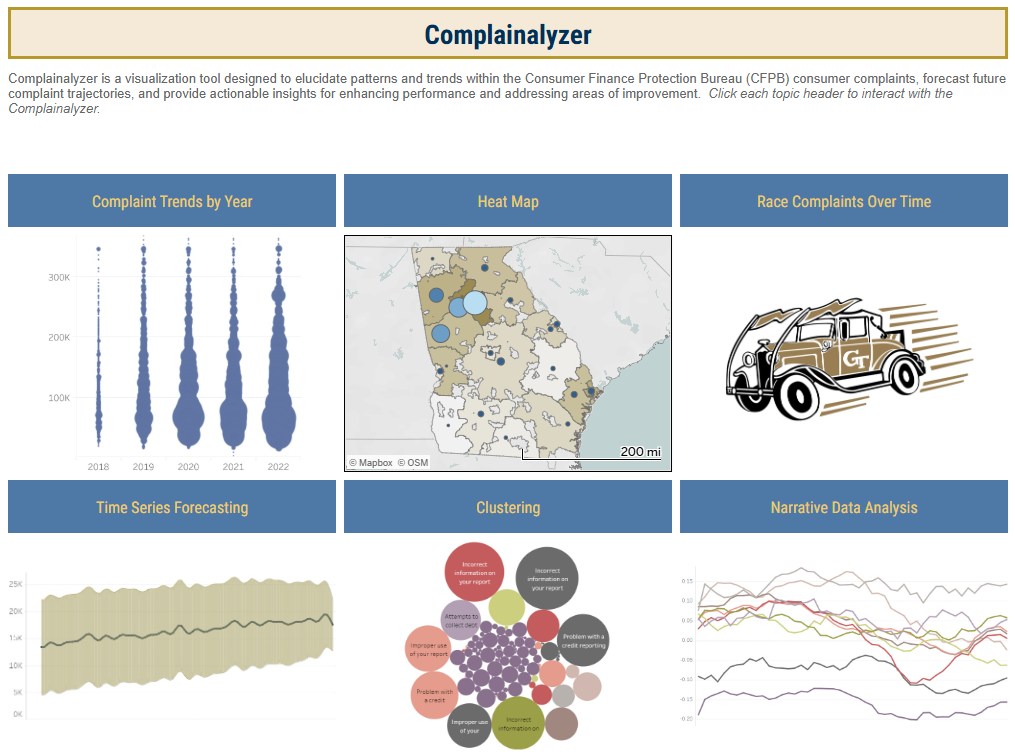

I'm Maluhia, and I love working on machine learning models as well as process analysis and improvement. I am currently a founding member of the Solutions Engineering team at Paramark, a San Francisco startup working on Marketing Mix Models for enterprise-level marketing organizations. I'm a full-stack data professional: I use Python to run ETL on client marketing data, train and tune ML models, and design, analyze, and recommend marketing experiments. I take analysis work off the software engineers' plates and help guide work on the product that I use now and that clients will use in the near future! I have an MS in Analytics from the Georgia Institute of Technology and a BS in Computer Science from BYU-Hawai'i.

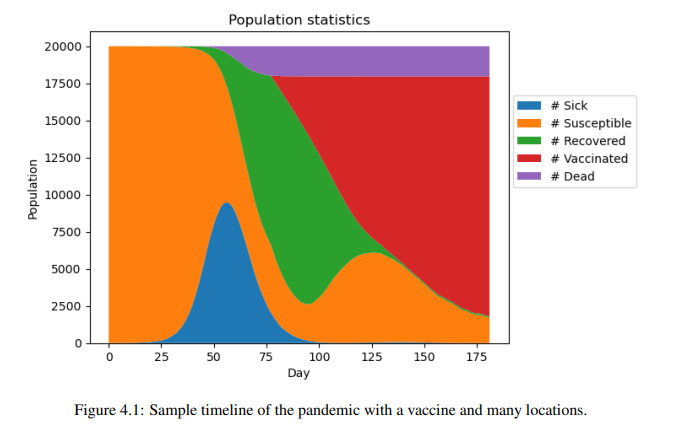
